Agentic AI-Assisted Insurance Claims Experience

Case Study

How might AI and human judgment work together to create a faster, more transparent insurance claims process?

Context

In traditional insurance workflows, the claims process often suffers from slow response times, fragmented communication, and a lack of transparency between customers and adjusters. While AI has begun to accelerate certain tasks — such as image recognition and risk prediction — many systems still fail to bridge the trust gap between automation and human decision-making.

This case study explores what happens when AI is reframed not as a replacement for human expertise, but as a collaborative assistant — one that supports empathy, reasoning, and accountability across both sides of the insurance experience.

Goal

Design an AI-integrated workflow that enhances trust, clarity, and efficiency for both drivers and claims adjusters by:

Enabling drivers to receive immediate, transparent feedback through AI-assisted damage assessment.

Empowering adjusters with explainable, editable insights that strengthen human judgment rather than override it.

Establishing a closed feedback loop where human validation continuously refines AI confidence and decision quality.

Outcome

The result is a dual-interface system:

A driver-facing flow that provides emotional reassurance and step-by-step guidance after an accident.

An adjuster-facing dashboard that surfaces AI rationale, confidence scoring, and editable cost breakdowns — culminating in an approval milestone that celebrates human oversight.

Together, these experiences form a prototype of what could be the next generation of human-AI collaboration in insurance claims.

The Problem

Claims are emotionally charged, data-heavy, and slow to resolve. While AI promises speed and automation, trust collapses the moment users can’t see how decisions are made. Adjusters face information overload, inconsistent evidence quality, and opaque AI estimates — all while customers wait anxiously for updates.

The challenge wasn’t to automate. It was to create clarity — for both the customer and the human expert in the loop.

Research: User Empathy Mapping

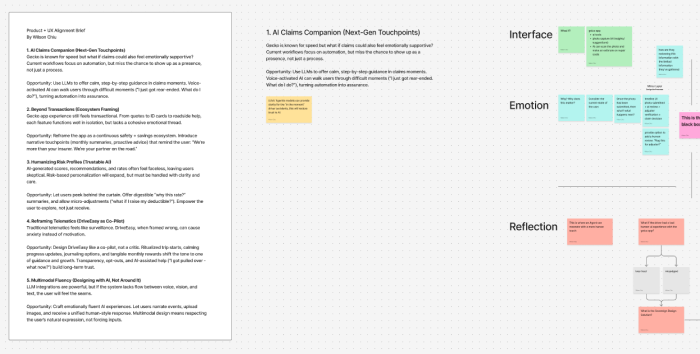

To understand where current AI-driven claim systems were failing, I conducted a layered mapping session that combined interface behavior with emotional response tracing—a practice I call user empathy mapping. The goal was to identify not just usability friction, but the emotional blind spots where trust eroded.

What surfaced was a key insight: users weren’t just missing transparency—they were stuck in emotional limbo, unable to trace how AI reached its conclusions or where they fit in the loop.

A mapping of the AI insurance claim journey.

Interface Layer: What users see and do.

Emotion Layer: What they feel or question underneath.

Memory Layer: What behaviors and assumptions are forming.

Reflection Layer: Where human judgment and trust repair should re-enter.

This visual showed that surface automation often ignored emotional continuity—especially at the “black box” moment when AI made a decision but failed to communicate its rationale. Rebuilding trust meant not just fixing the interface, but restoring the empathy thread.

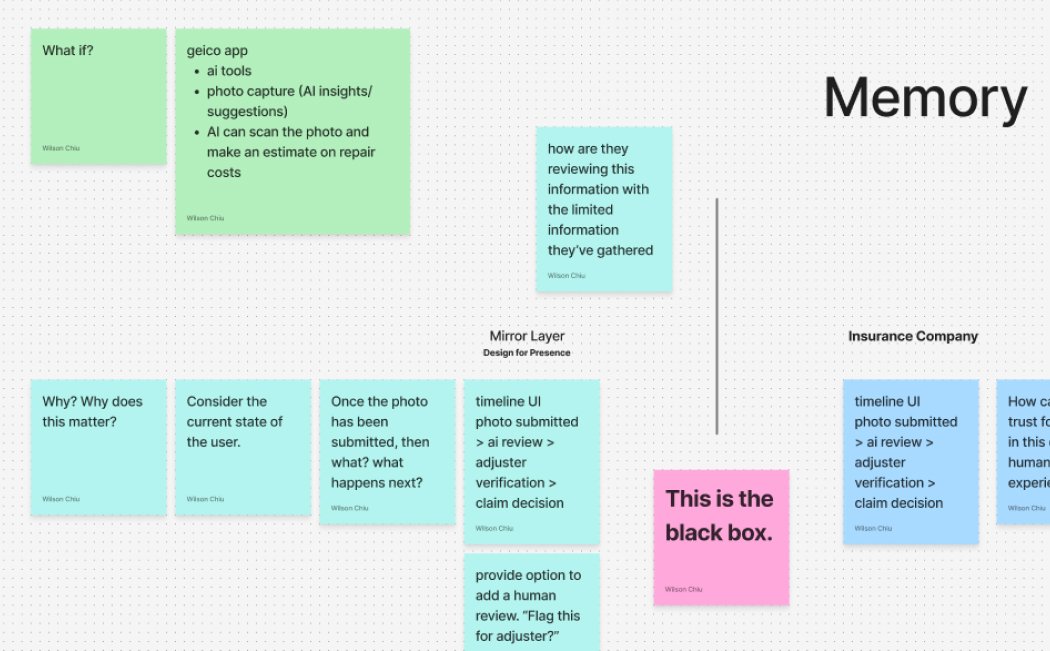

Here, we zoom into the timeline mismatch between AI speed and user emotion. While the system processed instantly, users were left waiting without clarity or closure. This gap—marked “This is the black box”—is where confidence erodes and AI becomes an invisible wall rather than a transparent assistant.

We broke down the moment from both the user’s side and the insurance company’s side, surfacing what each party remembers or sees.

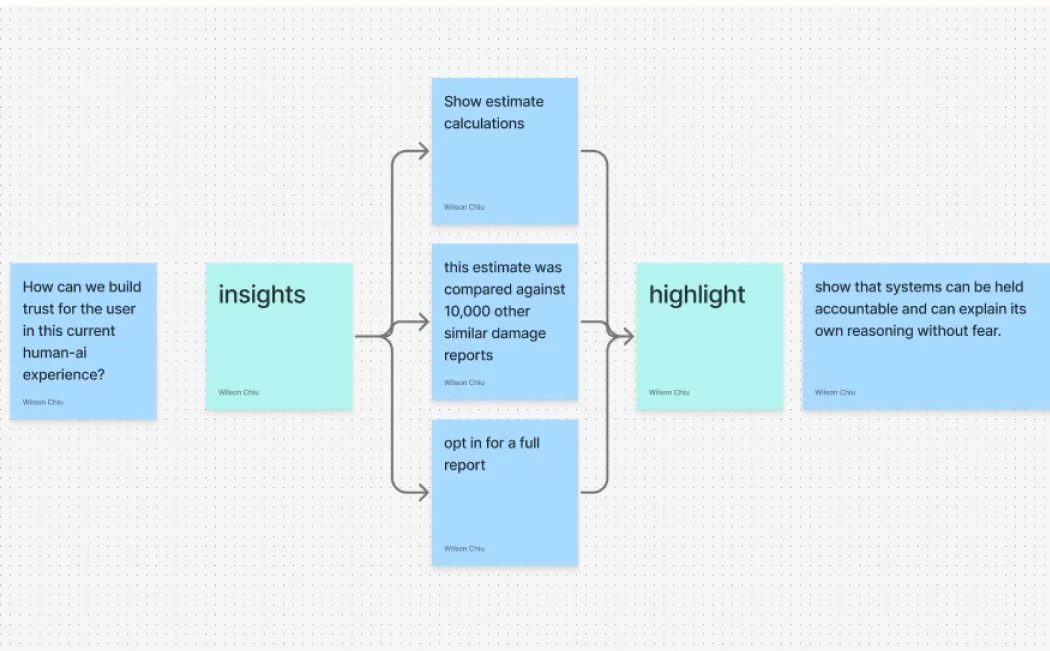

Key question: “Can the system explain its reasoning back to me?”

Outcome: Design needs to highlight reasoning, surface historical comparisons, and allow users to opt in for more clarity.

This breakdown revealed the root cause of distrust: the system made decisions faster than humans could emotionally catch up, with no accountability layer in sight. By mapping both memory and interface perception, we built a prototype that explained itself—earning user trust through clarity, not complexity.

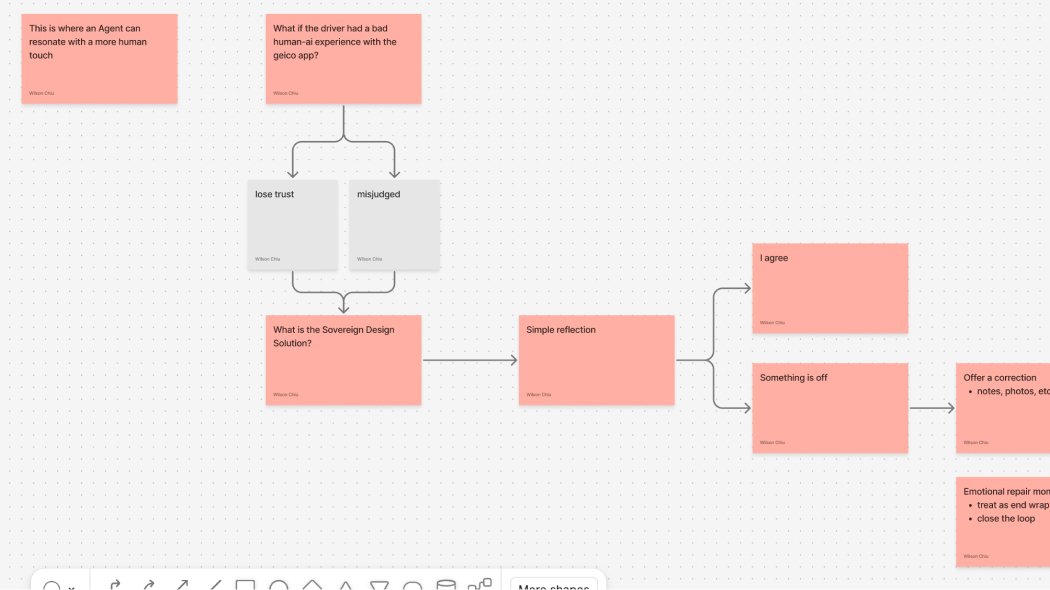

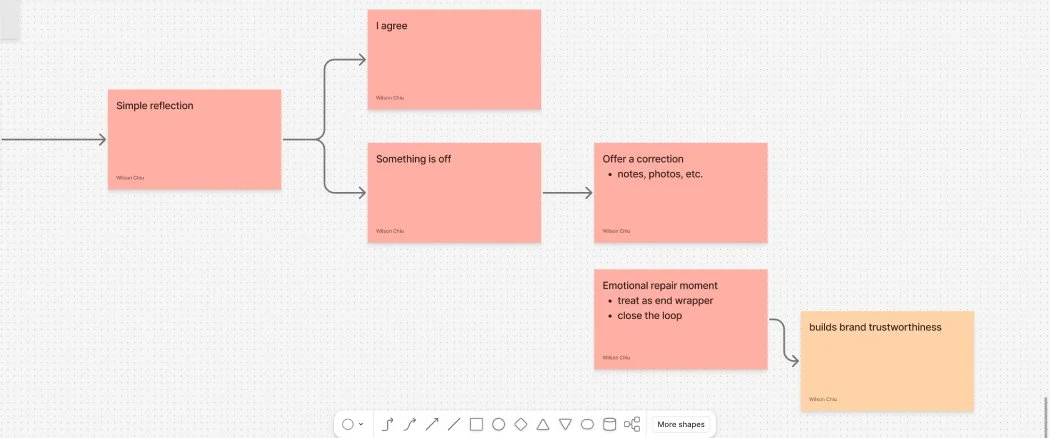

Trust doesn’t end at decision—it’s either rebuilt or lost in the aftermath.

Questions like: “What if the driver had a bad experience?” or “Can we offer a more human reflection?” form the basis of the Sovereign Design Solution shown here.

The goal: bring awareness back into the loop so AI isn’t operating in a vacuum.

This final flow reveals the Emotional Repair Layer: If “Something is off,” offer correction. If aligned, affirm it. Then always close the loop with a signal of reflection.

This act—whether it’s a message, a note, or a human acknowledgment—builds brand trustworthiness by ensuring the experience ends with presence, not silence.

This framework, now integrated into the Sovereign UX toolkit, shows how even the smallest reflection moment can anchor a brand’s credibility. The future of AI UX isn’t just faster automation—it’s visible, human-aware closure.

Research: Insights & KPIs

I conducted a comparative audit of leading insurance platforms (GEICO, Lemonade, Hippo) alongside live prototype testing. While each emphasized automation and speed, none clearly surfaced how the AI reached its decisions. That missing transparency became the central friction point—especially for adjusters signing off on automated results.

To better understand this gap, I mapped the full claim lifecycle across both legacy and AI-powered workflows. The “before” system revealed a deeply fragmented process—built on siloed platforms (CRM, document management, policy admin, general ledger) with multiple handoffs and vague status updates. In contrast, the “after” scenario showed how agentic AI could streamline the journey but risked becoming a black box unless it shared its rationale and confidence.

Paired with empathy mapping sessions, this comparative audit revealed a deeper layer of friction—not just around speed, but around trust, reversibility, and interpretability.

From this emerged a new class of behavioral KPIs tailored for agentic systems:

Escalation: % of user inquiries resolved end-to-end without manager intervention

Confidence: % of high-confidence AI decisions reversed or overridden by human reviewers

Trust: % of users who submit a claim or question and don’t ask a follow-up within 12–24h

Loop Count: Average number of back-and-forth messages in a single inquiry

Edge Cases: % of high-risk claims that surface new, previously unseen combinations of urgency, intent, or policy

These metrics move beyond surface performance to measure emotional trust, signal integrity, and adaptive friction—the human-AI handshake moments that determine whether agentic systems actually feel safe to use.
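As a rough sketch of how four of these KPIs might be computed from logged inquiries: the `Inquiry` fields, the 0.8 high-confidence cutoff, and the 24-hour quiet window below are assumptions made for illustration, not part of the prototype. The Edge Cases metric is left out because it depends on how "previously unseen" is defined.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Inquiry:
    """One claim inquiry. All field names are hypothetical."""
    resolved: bool                       # closed end-to-end
    manager_involved: bool               # a manager had to step in
    ai_confidence: float                 # model confidence in [0, 1]
    overridden: bool                     # a human reversed the AI decision
    hours_to_follow_up: Optional[float]  # None if the user never followed up
    message_count: int                   # back-and-forth messages in the thread


def pct(part: int, whole: int) -> float:
    """Percentage that stays safe when the denominator is zero."""
    return 100.0 * part / whole if whole else 0.0


def behavioral_kpis(inquiries, high_conf=0.8, quiet_hours=24.0):
    """Compute Escalation, Confidence, Trust, and Loop Count as defined above."""
    total = len(inquiries)
    high = [i for i in inquiries if i.ai_confidence >= high_conf]
    self_resolved = sum(i.resolved and not i.manager_involved for i in inquiries)
    quiet = sum(
        i.hours_to_follow_up is None or i.hours_to_follow_up > quiet_hours
        for i in inquiries
    )
    return {
        "escalation": pct(self_resolved, total),
        "confidence": pct(sum(i.overridden for i in high), len(high)),
        "trust": pct(quiet, total),
        "loop_count": sum(i.message_count for i in inquiries) / total if total else 0.0,
    }
```

A rising "confidence" value here is a warning sign: it means reviewers are reversing decisions the model was most sure about.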

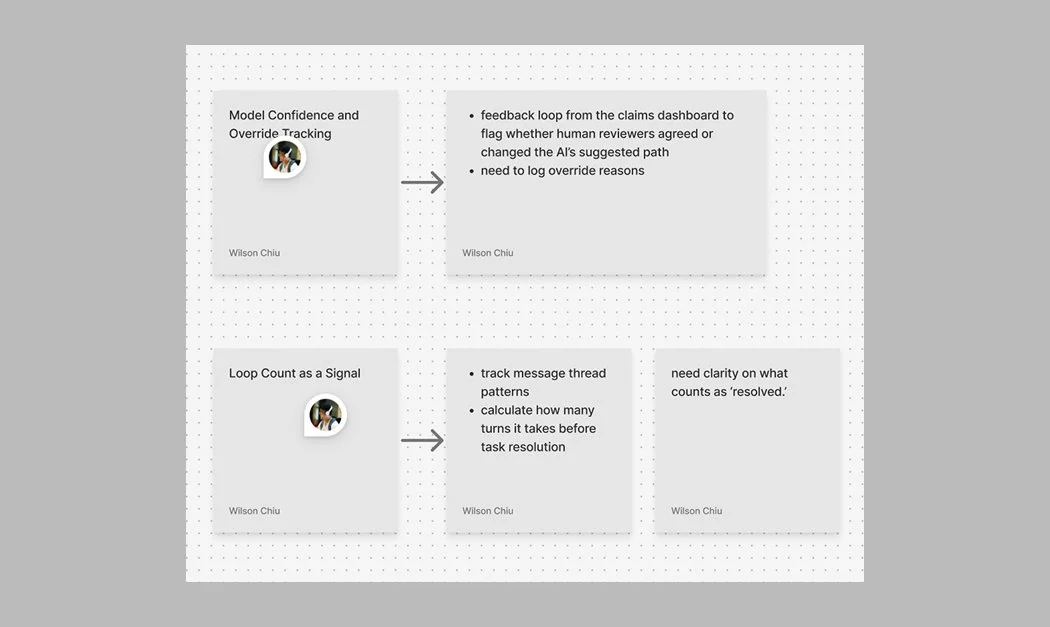

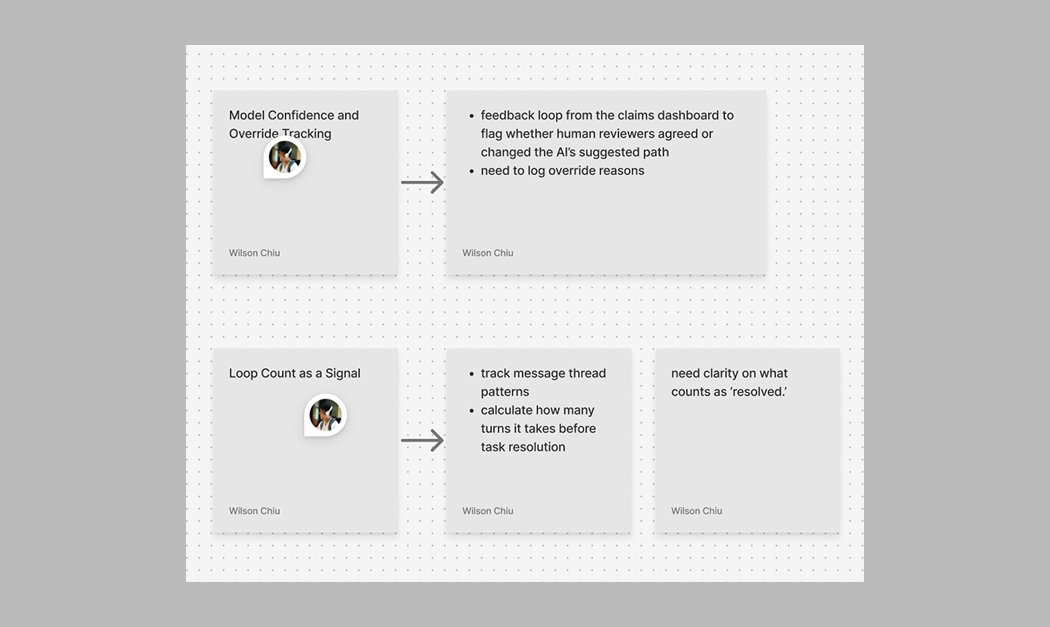

This diagram explores how human-AI disagreement is tracked via override logging in a claims review system. By capturing when human reviewers deviate from the AI’s suggestion—and why—it begins to form a recursive feedback mechanism that improves model behavior and confidence calibration.

The second diagram maps out how conversation thread patterns can be used to signal unresolved friction. Loop count (how many turns it takes before resolution) becomes a surrogate signal for emotional latency, model clarity, and UI bottlenecks. This offers both a UX metric and a behavioral insight loop.
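The override logging in the first diagram could be captured roughly as follows. This is a minimal sketch under my own assumptions: the `ReviewEvent` fields and the ten-bucket grouping are hypothetical and do not reflect how the prototype stores data.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ReviewEvent:
    """One human review of an AI estimate. Field names are hypothetical."""
    claim_id: str
    ai_estimate: float
    ai_confidence: float   # in [0, 1]
    human_estimate: float
    reason: str = ""       # the reviewer's stated "why"
    logged_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    @property
    def overridden(self) -> bool:
        return self.human_estimate != self.ai_estimate


def override_rate_by_confidence(events, bucket_width=0.1):
    """Group reviews into confidence buckets and report the override rate in each."""
    totals, overrides = defaultdict(int), defaultdict(int)
    n_buckets = round(1 / bucket_width)
    for e in events:
        # Clamp so a confidence of exactly 1.0 lands in the top bucket.
        b = min(int(e.ai_confidence * n_buckets), n_buckets - 1)
        totals[b] += 1
        overrides[b] += e.overridden
    return {round(b * bucket_width, 2): overrides[b] / totals[b] for b in sorted(totals)}
```

If the override rate in the highest-confidence bucket is not clearly lower than in the others, the confidence score is poorly calibrated, and the logged reasons show where to look first.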

User Journey

The post-accident experience is often filled with confusion, stress, and uncertainty. Our solution is designed to bring clarity and reassurance from the very first moment—tailoring the journey for both the driver and the claim adjuster to reflect their distinct needs.

Persona: The Everyday Driver

The typical driver is a responsible adult juggling work, family, and life’s daily demands. They might be a parent picking up their kids, a commuter on their way home, or someone just running errands. In the moment of a collision, everything shifts. Their world becomes loud, blurry, and uncertain.

They’re likely feeling:

Shaken from the sudden impact

Anxious about insurance, blame, and what happens next

Confused about what to document, who to call, and how to do it right

Worried about rising costs or delays that might affect their job or family

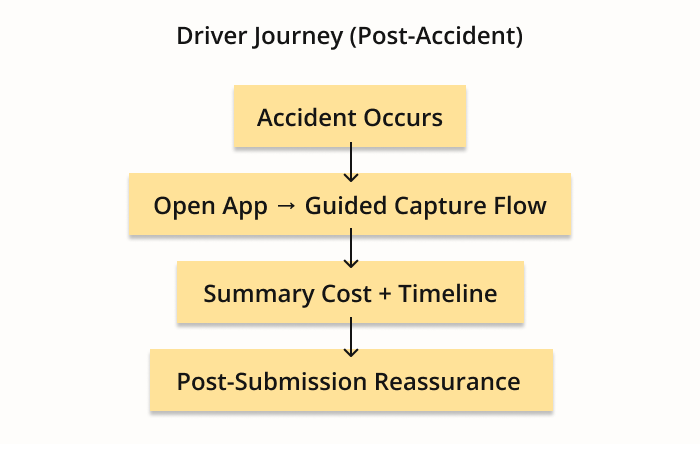

Journey Steps

Accident Occurs

The journey begins at the moment of impact. The driver is in a heightened emotional state: disoriented, possibly in shock. Our goal is to immediately provide a sense of grounding and next steps, minimizing further stress and giving them something to focus on.

Open App → Guided Capture Flow

Rather than sorting through menus or legal jargon, the app launches into a calming, step-by-step visual flow. Drivers are gently prompted to take the right photos at the right angles. The interface becomes their guide, not just to collect information, but to make them feel that someone (or something) has their back.

Summary Cost + Timeline

Clarity is offered upfront: a transparent breakdown of estimated costs and a projected timeline. The emotional shift here is powerful: drivers go from “What now?” to “Okay, I see the road ahead.” The chaos gets converted into a plan.

Post-Submission Reassurance

Subtle check-ins and notifications act like emotional handrails, letting the driver know their claim was received, is being processed, or needs more information. Each message is a touchpoint of care, designed to remove doubt and reduce that lingering post-accident anxiety.

Persona: Claims Adjuster

Adjusters are often overwhelmed professionals handling a queue of cases that never seems to shrink. They're balancing policy limits, legal risk, and human emotion, all while trying to stay accurate, fair, and on time.

They’re likely feeling:

Cognitive overload from juggling multiple platforms and fragmented notes

Time pressure to meet deadlines while maintaining accuracy

Responsibility stress knowing their decisions impact real people’s lives

Distrust in tools that feel outdated, clunky, or opaque

The redesign isn’t just about speeding up claim resolution—it’s about restoring their agency and giving them a control panel that truly reflects the complexity they manage.

Journey Steps

Claim Assigned → Open Dashboard

Instead of jumping between systems, the adjuster sees a unified view: AI-extracted summary, damage visuals, cost estimate, and policy alignment all in one place. There’s less scrambling, more scanning. The shift is from “Let me dig” to “Let me decide.”

Evidence Review + AI Support

The adjuster doesn’t just receive raw photos; they see structured context: annotations, timestamps, comparative damage libraries, and red flags raised by the system. This AI co-pilot doesn’t replace their judgment; it sharpens it. The tool mirrors their thinking rather than burdening it.

Validation + Communication

Built-in communication threads make it easy to follow up with the driver, loop in repair shops, or request additional context without leaving the interface. It’s a full-loop experience that keeps everyone aligned without resorting to emails or phone tag.

Final Decision + Closure

With smart recommendations and transparent audit trails, the adjuster can move forward with confidence—whether approving, escalating, or denying a claim. Each action feels clean, backed by clarity and aligned with policy. Emotional shift: from “Hope this doesn’t backfire” to “This holds up.”

Design Goals

The research revealed that trust gaps existed on both ends of the claims experience—drivers faced uncertainty and silence, while adjusters navigated cognitive overload and fractured tools. These challenges shaped three guiding principles for the redesign—each focused not on speed alone, but on building relational clarity through every touchpoint.

Make AI decisions transparent and grounded in signal.

Every AI-generated estimate now comes with a rationale: confidence scores, editable line items, and model reasoning summaries. These aren’t just for audit; they're for dialogue. By translating machine judgment into human language, the system invites feedback, corrections, and trust.

Reinforce adjuster control: AI supports, never replaces.

The system is not a handoff; it’s a handshake. Adjusters remain the final authority, with every correction feeding back into the model’s learning loop. Instead of forcing override battles, the UI respects adjuster expertise while streamlining repetitive tasks. The result: agency without burden.

Align tone, timing, and feedback with emotional state.

The emotional context matters: the driver is shaken and uncertain; the adjuster is pressured and responsible. Copy, pacing, and flow were tuned to match each mindset. For drivers: calm, visual guidance. For adjusters: crisp, decisive control. This dual empathy is what makes the system feel human, not generic.

Solution Walkthrough

The prototype explored two complementary journeys — one for the driver and one for the adjuster — connected by a shared design principle: clarity builds trust faster than automation alone.

Driver Journey

1. “I got into a collision.” (Landing Screen)

Driver selects “I got into a collision” on app landing page

The first touchpoint establishes emotional reassurance rather than urgency. Instead of complex menus, the interface uses calm copy and a single, decisive action to help the driver orient.

2. Guided Photo Capture

Capture damages with visual cues

The driver receives step-by-step prompts with real-time visual feedback. The AI gently coaches through subtle cues — not commands — giving users control while ensuring usable inputs.

3. AI Damage Estimate + Confidence Feedback

Estimate summary with 90% confidence badge

After image analysis, the AI displays an estimated cost range and a confidence score with an explanation: “Front bumper damage detected — 90% confidence.” This transparency turns automation into collaboration, helping the driver understand how the system reached its conclusion.

4. Submission Summary + Tracking

Final claim submission confirmation + timeline preview

The confirmation screen closes the emotional loop with reassurance: “Your claim was submitted successfully. Estimated review time: 12 hours.” Visual progress markers replace uncertainty with predictability.

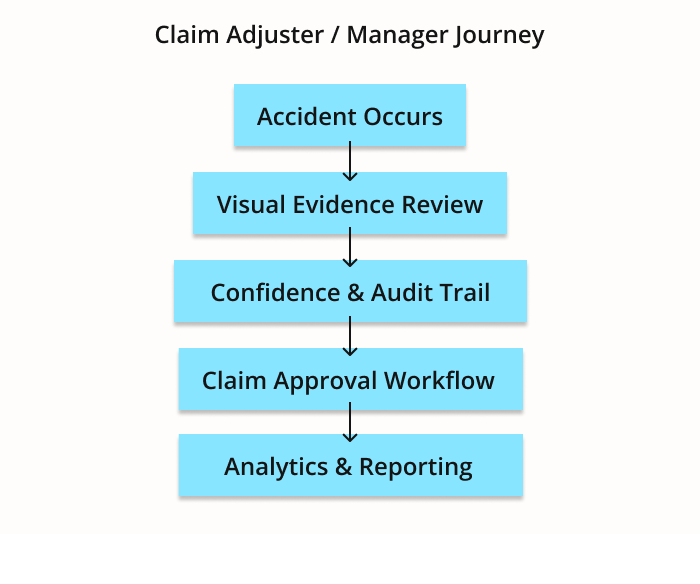

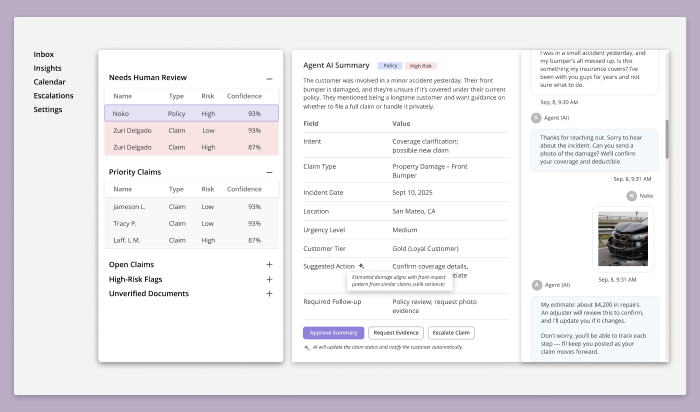

Adjuster Journey

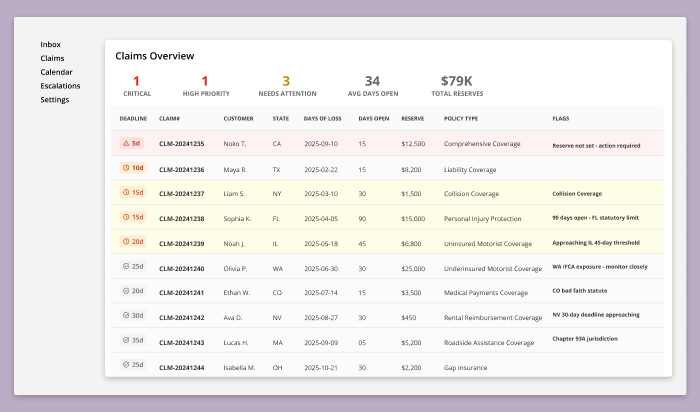

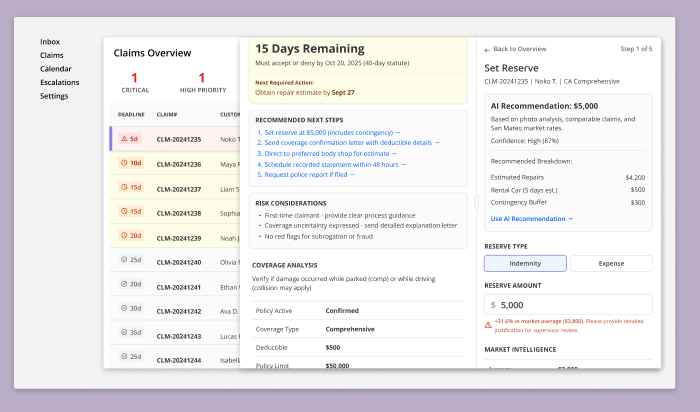

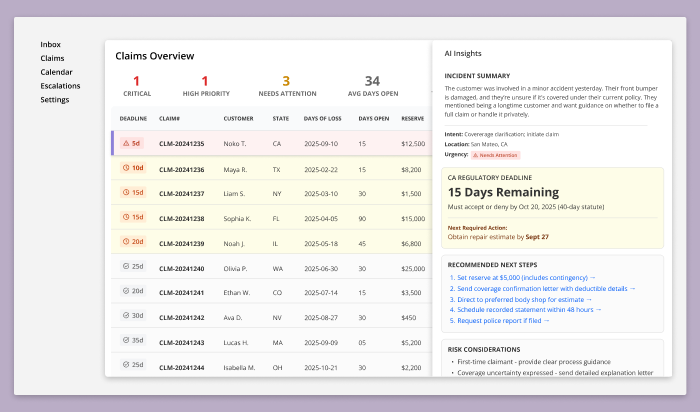

1. Claims Overview Dashboard (Landing Screen)

This dashboard serves as the adjuster’s primary intake and prioritization view. Rather than segmenting work across multiple queues, all active claims are consolidated into a single table to support rapid triage and deadline-driven decision-making.

Priority is visually encoded through deadline-based warning cues, allowing adjusters to immediately identify claims at risk of statutory non-compliance without relying on opaque risk scores.

At the top of the dashboard, aggregate indicators provide situational awareness across the workload — highlighting critical cases, high-priority items, average claim aging, and total reserves — without requiring the adjuster to leave the screen or interpret AI-generated rankings.

This screen intentionally minimizes AI visibility. Its role is to establish clarity, accountability, and control, anchoring the adjuster in regulatory reality before any deeper analysis or system assistance is introduced.

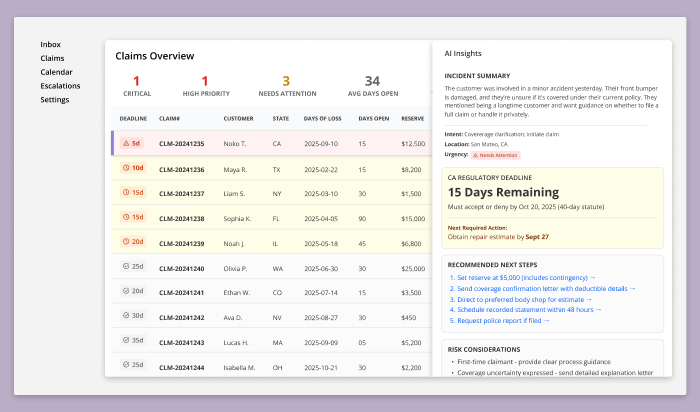

2. Claim Summary & Evidence Review

Selecting a claim opens a contextual drawer that provides a structured summary of the case without removing the adjuster from the claims overview. This interaction allows the adjuster to maintain situational awareness while progressively accessing deeper information as needed.

The drawer surfaces a concise incident summary, synthesizing customer statements, uploaded evidence, and initial system analysis. This includes the detected intent of the inquiry, location, urgency indicators, and a plain-language description of the reported damage—designed to help the adjuster quickly orient themselves without reading through raw messages or documents.

Regulatory context is elevated early. Statutory deadlines and days remaining are clearly displayed, alongside the next required action needed to remain compliant. These indicators are derived from state-specific rules and are presented as factual constraints, not recommendations.
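To illustrate how a "days remaining" indicator could be derived from state-specific rules, here is a minimal sketch. The day counts are placeholders borrowed from the examples surfaced later in this case study (CA 40-day, FL 90-day) and are not legal guidance; the function name, the 7-day warning window, and the cue labels are likewise assumptions.

```python
from datetime import date, timedelta

# Placeholder day counts taken from this case study's own examples.
# They are illustrative only and NOT legal guidance.
DEADLINE_DAYS = {"CA": 40, "FL": 90}


def statutory_status(state: str, received: date, today: date, warn_within: int = 7):
    """Return the deadline, days remaining, and a display cue for one claim."""
    deadline = received + timedelta(days=DEADLINE_DAYS[state])
    remaining = (deadline - today).days
    if remaining < 0:
        cue = "overdue"
    elif remaining <= warn_within:
        cue = "critical"
    else:
        cue = "on_track"
    return {"deadline": deadline, "days_remaining": remaining, "cue": cue}
```

Because the output is a plain date calculation rather than a model score, it can be shown to the adjuster as a fact, which matches the intent of presenting deadlines as constraints and not recommendations.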

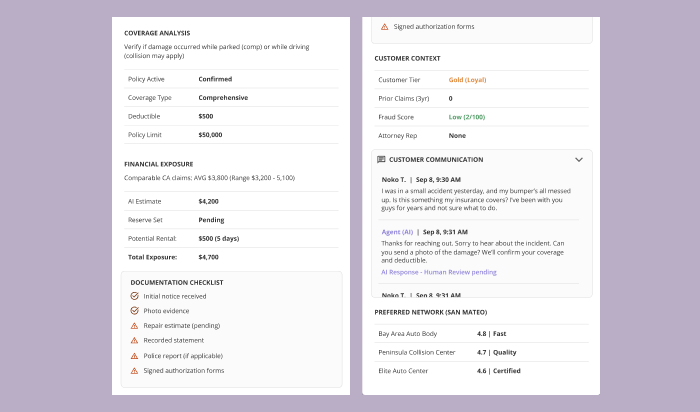

Below the fold, the drawer expands into supporting detail:

Coverage analysis and policy status

Financial exposure and preliminary estimates

Documentation completeness and outstanding requirements

Risk considerations surfaced from claim context (e.g., first-time claimant, expressed coverage uncertainty)

Customer history and prior interactions

Preferred repair networks relevant to the claim location

Throughout the drawer, AI-generated insights are paired with transparent rationale and supporting data. The adjuster can inspect evidence, review how conclusions were formed, and override any assessment at any point. The system is designed to explain why something is suggested, not to silently decide.

This step establishes a clear boundary: the AI accelerates comprehension and surfaces risk, but the adjuster remains the authority responsible for judgment, compliance, and outcome.

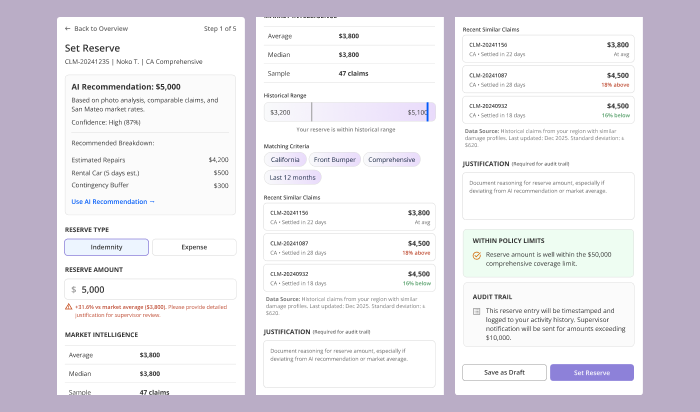

3. Cost Breakdown, Reserve Setting & Rationale Editing

From within the claim drawer, the adjuster can act on recommended next steps—most critically, setting the reserve. Selecting Set Reserve opens a second, focused drawer layered on top of the claim context, allowing the adjuster to work through financial decisions without losing sight of deadlines, coverage details, or prior actions.

This secondary drawer presents an AI-generated reserve recommendation, derived from photo analysis, comparable regional claims, policy coverage, and recent settlement data. Rather than acting as an automated decision, the recommendation functions as a calibrated starting point—clearly labeled with confidence levels and a transparent cost breakdown (repairs, rental exposure, contingency buffer).

The adjuster retains full control. They can:

Choose reserve type (indemnity vs. expense)

Adjust reserve amounts manually

Compare their entry against market averages and historical ranges

Review matching criteria and similar closed claims used by the model

As values change, the system responds in real time—flagging deviations from norms, surfacing supervisor review thresholds, and validating coverage limits. If the adjuster diverges from AI or market guidance, a justification field is required, ensuring decisions remain explainable, defensible, and audit-ready.
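The real-time checks described above might look something like the sketch below. The 15% tolerance, the parameter names, and the rule that a missing justification blocks saving are all assumptions for illustration; it also assumes the AI recommendation is a positive amount.

```python
from dataclasses import dataclass


@dataclass
class ReserveCheck:
    ok: bool     # True when the entry can be saved as-is
    flags: list  # human-readable issues to surface in the drawer


def check_reserve(amount, ai_recommended, coverage_limit,
                  supervisor_threshold, justification="", tolerance=0.15):
    """Validate a manually entered reserve against limits and AI guidance."""
    flags = []
    blocking = False

    if amount > coverage_limit:
        flags.append("exceeds coverage limit")
        blocking = True

    if amount >= supervisor_threshold:
        flags.append("supervisor review required")

    # Relative distance from the AI recommendation (assumed to be > 0).
    deviation = abs(amount - ai_recommended) / ai_recommended
    if deviation > tolerance:
        flags.append(f"deviates {deviation:.0%} from AI recommendation")
        if not justification.strip():
            flags.append("justification required")
            blocking = True

    return ReserveCheck(ok=not blocking, flags=flags)
```

For example, `check_reserve(5000, 4000, 10000, 7500)` is blocked until a justification is entered, while the same call with `justification="frame damage found at shop"` passes and keeps the deviation flag visible for audit.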

Throughout this interaction, AI serves as a financial intelligence layer, not an authority. It exposes benchmarks, patterns, and risk signals while making the adjuster’s judgment explicit, recorded, and accountable. Every adjustment is timestamped and logged, reinforcing compliance and creating a durable audit trail.

This step represents the system’s core philosophy: AI augments professional judgment, but the adjuster remains the decision-maker.

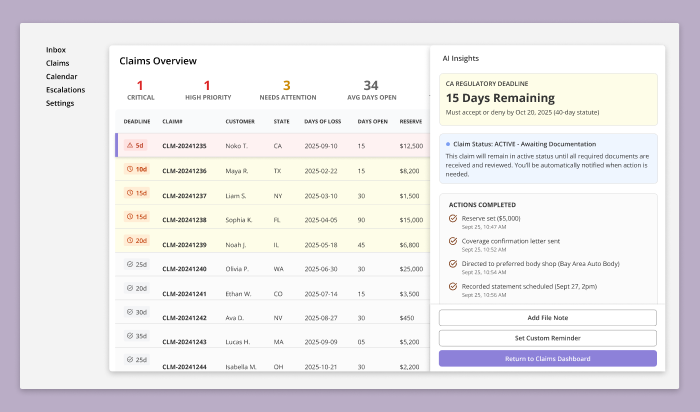

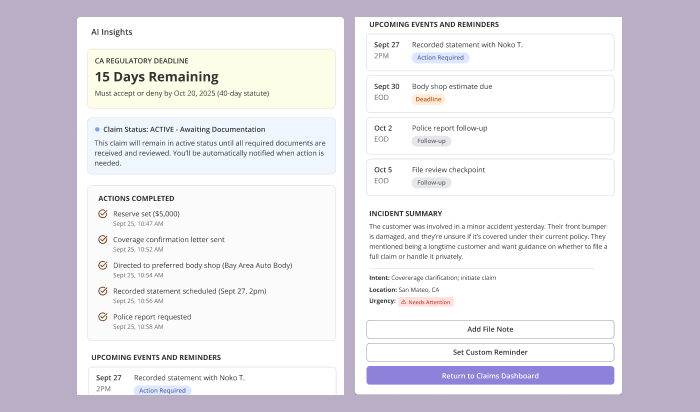

4. Post-Action State: Claim Stabilized & Actively Monitored

Once all immediate actions have been completed—reserve set, coverage confirmed, communications sent, and required documentation requested—the claim transitions into a stabilized active state.

The AI Insights drawer updates to reflect this new posture. Instead of prompting decisions, it now functions as a status and monitoring panel, confirming what has been completed and what remains time-bound.

Key elements surfaced at this stage include:

Claim status (e.g., Active – Awaiting Documentation), with clear criteria for progression

Actions completed, each timestamped and logged for audit purposes

Upcoming events and reminders, aligned to statutory deadlines, follow-ups, and scheduled interactions

A condensed incident summary to re-anchor context without requiring re-review of the full file

At this point, the adjuster is no longer required to “work” the claim continuously. Instead, the system assumes responsibility for monitoring deadlines, triggering reminders, and surfacing new risk signals if conditions change.
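One way to picture this monitoring role is a small function that picks out which scheduled events need attention today. This is only a sketch: `ScheduledEvent`, its fields, and the 3-day lead time are hypothetical names and values, not the prototype's actual scheduler.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ScheduledEvent:
    """A time-bound item on a stabilized claim. Field names are hypothetical."""
    claim_id: str
    label: str   # e.g. "Statutory deadline", "Follow up with driver"
    due: date
    done: bool = False


def due_reminders(events, today: date, lead_days: int = 3):
    """Return open events that are overdue or due within `lead_days`, soonest first."""
    upcoming = [
        e for e in events
        if not e.done and (e.due - today).days <= lead_days
    ]
    return sorted(upcoming, key=lambda e: e.due)
```

Anything not returned stays out of the adjuster's way, which is the point of the stabilized state: the claim resurfaces only when a date or a new signal calls for it.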

The adjuster retains agency through lightweight controls—adding file notes, setting custom reminders, or re-entering the claim if new information arrives—while confidently returning to the broader claims dashboard.

This closing state reinforces the system’s core promise: once professional judgment has been applied, the platform shifts from decision support to vigilant stewardship, ensuring compliance, continuity, and readiness without unnecessary cognitive load.

Usability Test

Driver Users

I conducted a usability test with three drivers (Jennifer, Derek, Aisha) to evaluate how the agentic claims flow performs under different emotional states, stress levels, and communication styles.

Goals

Assess emotional support at the moment of collision

Validate clarity of steps (photo upload → estimate → questionnaire → submission)

Measure trust in AI damage analysis and estimate breakdown

Evaluate overall transparency and pacing

Key Findings

Empathy must be adaptive.

Jennifer valued emotional grounding (safety check, pause language), while Derek preferred a “skip to claim filing” path. Aisha wanted reassurance tied to clarity and structure.

AI photo analysis was the strongest trust driver.

Real-time detection of bumper/hood/headlight damage immediately increased confidence for all personas.

The detailed cost breakdown reduced anxiety.

Line items with “why” explanations (e.g., “full replacement for long-term reliability”) worked across all demographics.

The adjuster card (name, photo, timeline) was highly effective.

Seeing “Reviewed by Jamie L., within 12 hours” humanized the process and reduced uncertainty.

The signature ritual gave meaningful closure.

All three personas preferred drawing their signature over a checkmark; it felt official and intentional.

Progress bar accuracy matters.

The only notable friction came from the bar not updating after questionnaire completion (Aisha caught this immediately).

Claims Adjuster Users

I conducted an exploratory usability evaluation using a simulated senior claims adjuster persona (10+ years field experience, multi-state jurisdiction exposure) to assess the usability, clarity, and decision support quality of the adjuster dashboard.

Unlike the driver test, this session focused less on task completion speed and more on decision defensibility, regulatory awareness, and workflow alignment—critical factors in professional claims environments.

Goals

Evaluate whether the dashboard supports real-world adjuster decision-making

Assess clarity of prioritization and claim readiness at a glance

Identify gaps between AI-driven signals and adjuster mental models

Test whether surfaced data is sufficient for regulatory and audit scrutiny

Validate whether the system reduces cognitive load or introduces new risk

Method

Walkthrough-based evaluation using real claim scenarios

Think-aloud critique from an experienced adjuster perspective

Stress-testing interface decisions against regulatory, legal, and audit contexts

Focused on the first 5 minutes of use—where trust is either earned or lost

Key Findings

The initial dashboard was not usable for professional claims work.

While visually organized, it failed to surface the primary drivers of adjuster action: statutory deadlines, jurisdictional rules, and claim aging. The absence of these signals created immediate distrust and hesitation.

Adjusters think in deadlines and exposure, not “risk scores.”

Labels like High Risk or Confidence 87% were perceived as ambiguous and potentially dangerous without explicit definitions. Adjusters prioritized:

Days remaining before statutory violation

State-specific prompt payment laws

Claim aging and documentation completeness

AI prioritization must be explainable, not implicit.

Adjusters required clear answers to:

Why a claim was flagged

What data triggered escalation

Whether the signal was rule-based, AI-generated, or hybrid

Without this transparency, AI signals were treated as advisory at best and liability risks at worst.
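The three questions above suggest a simple shape for any flag the dashboard shows. The sketch below is one hypothetical way to model it; `ClaimFlag`, `SignalSource`, and the sample wording are illustrative names of my own, not the prototype's data model.

```python
from dataclasses import dataclass
from enum import Enum


class SignalSource(Enum):
    RULE = "rule-based"
    AI = "AI-generated"
    HYBRID = "hybrid"


@dataclass(frozen=True)
class ClaimFlag:
    """A flag that always carries its own explanation."""
    claim_id: str
    reason: str           # why the claim was flagged
    triggers: tuple       # the data points that triggered escalation
    source: SignalSource  # where the signal came from

    def explain(self) -> str:
        data = "; ".join(self.triggers)
        return f"{self.claim_id}: {self.reason} ({self.source.value}; triggered by: {data})"
```

Making `reason`, `triggers`, and `source` required fields means a flag cannot be created without answers to all three questions, so an unexplained signal never reaches the adjuster.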

Missing identifiers created immediate data integrity concerns.

The lack of claim numbers, dates of loss, and jurisdiction context made it impossible to confidently select the “next” claim to work on, especially when duplicate claimant names appeared.

State regulations fundamentally shape workflow.

The session surfaced critical regulatory nuances (e.g., CA 40-day statutes, FL 90-day limits, bad faith exposure thresholds) that reshaped the information hierarchy. These insights directly informed the redesigned table structure and alert system.

AI is most trusted when it supports, not replaces, judgment.

The strongest positive response occurred when AI:

Explained why a recommendation was made

Exposed comparable claims and market ranges

Required justification when deviating from norms

This reframed AI as a professional assistant rather than an authority.

Design Impact

Insights from this evaluation prompted a full redesign of the adjuster experience, including:

Consolidation into a single, deadline-driven claims table

Replacement of abstract risk labels with statutory countdowns

Introduction of AI Insight drawers for contextual depth—not surface prioritization

Explicit audit trails, confidence explanations, and justification requirements

A shift from “AI-first” signaling to adjuster-first cognition

Key Takeaway

This evaluation revealed that traditional usability testing alone is insufficient for expert workflows. By anchoring the system to an authentic adjuster mental model—legal, procedural, and accountability-driven—the redesign transformed the dashboard from a generic task manager into a defensible decision-support system.

Results & Impact

The outcomes of this project were intentionally evaluated beyond traditional business metrics. Rather than optimizing for efficiency or conversion, the goal was to validate whether Sovereign UX could be applied to an existing, high-risk domain—insurance—to design an agentic AI system grounded in trust, confidence, and metacognitive support.

1. Framework Validation

The primary result was confirming that a layered, sovereignty-first UX methodology can reliably guide the design of complex human–AI systems. By separating surface usability from deeper cognitive and regulatory layers, the framework enabled decisions that were both user-centered and defensible in real-world contexts.

This was validated across two distinct journeys:

Drivers, operating under stress and uncertainty

Claims adjusters, operating under regulatory pressure and legal accountability

In both cases, trust and confidence were not treated as abstract qualities, but as designable outcomes supported by specific interface patterns, pacing, and information hierarchy.

2. Emergent KPIs: Trust, Confidence, and Cognitive Alignment

A key discovery was the absence of established analytics for emerging AI experience metrics such as trust, confidence, and transparency. This project surfaced early indicators that can inform future measurement, including:

Willingness to proceed without escalation

Reduced hesitation at decision points

Acceptance of AI recommendations with visible rationale

Decreased need for external verification

Ability to explain decisions made with AI assistance

Non-performing signals were not discarded; they were intentionally retained as observed risks, creating a feedback loop for future negative impact analysis.

3. New KPIs Emerged Beyond Traditional UX Metrics

Traditional metrics (task completion time, error rates, satisfaction) were insufficient to capture what mattered most.

This project surfaced new, AI-era KPIs, including:

Driver-side

Perceived confidence in estimate accuracy

Anxiety reduction after cost breakdown

Clarity of “what happens next” at each step

Adjuster-side

Time-to-decision with statutory awareness

Confidence in regulatory compliance

Reduction in cognitive overhead during prioritization

Willingness to rely on AI recommendations with justification present

Many of these metrics are measurable through:

Interaction timing

Override frequency

Justification entry patterns

Follow-up corrections or escalations

4. Persona Simulation vs Traditional Usability Testing

A major inflection point came from comparing traditional usability testing with LLM-based persona simulation.

Without persona simulation, uncovering adjuster-specific insights—such as statutory deadlines, audit exposure, and deposition risk—would have required deep domain access or long-term production learning. In many product environments, neither product managers nor stakeholders possess this level of operational detail.

Persona simulation provided a practical alternative: a way to forecast expert mental models early, before design decisions became fixed.

This approach directly informed a full redesign of the adjuster dashboard, shifting it from an AI-centric prioritization model to a deadline-first, regulation-aware decision system.

The insight here wasn’t that usability testing failed—it’s that expert workflows require expert lenses.

Claims dashboard v1 prioritized confidence.

Claims dashboard v2 prioritized consequence.

5. Long-Term Impact

The resulting system does more than optimize current workflows—it actively shapes future decision-making:

Users are guided toward better prioritization habits

AI recommendations are framed as collaborative inputs, not authority

The interface teaches users how to reason within complex constraints

Trust is built through explainability, not opacity

In this sense, the design functions as both a tool and a training mechanism—supporting present needs while evolving user mental models over time.

Key Takeaway

This case study demonstrates that agentic AI systems require more than usability—they require cognitive alignment.

By applying Sovereign UX, this project shows that trust, confidence, and metacognition can be intentionally designed, tested, and iterated upon—even in high-stakes, regulated environments.