Executive Summary

The Problem: AI systems are probabilistic, so some mistakes are inevitable. When an error or denial arrives without a clear, auditable explanation, it destroys user trust and undermines the integrity of the system.

The Mandate (Recourse): To achieve trust, the system must provide recourse—the ability for a user to understand, challenge, and correct an outcome. This transforms a frustrating rejection into a transparent, accountable decision.

The Strategy: Design must prioritize auditable decisions and graceful error handling. Every AI decision (e.g., denying a claim) must be accompanied by a clear, traceable reason in plain language. Users need controls to override or correct the system, and the system should visibly show that their feedback is used to improve the model.

For executive and product leaders, the pursuit of flawless AI performance is a strategic trap. Because AI systems are probabilistic, operating on likelihoods rather than certainties, they will inevitably produce errors, failures, and low-confidence outputs.

The true differentiator for market leaders is not preventing mistakes; it is how gracefully and accountably the system fails.

The cornerstone of accountability is recourse: the non-negotiable requirement that a user must be able to understand, challenge, and correct an AI-driven outcome. By embedding this mechanism, organizations can transform inevitable failure into a trust-building opportunity, proving that their systems operate with integrity and respect for user agency.

I. The Trust Crisis of Opaque Rejection

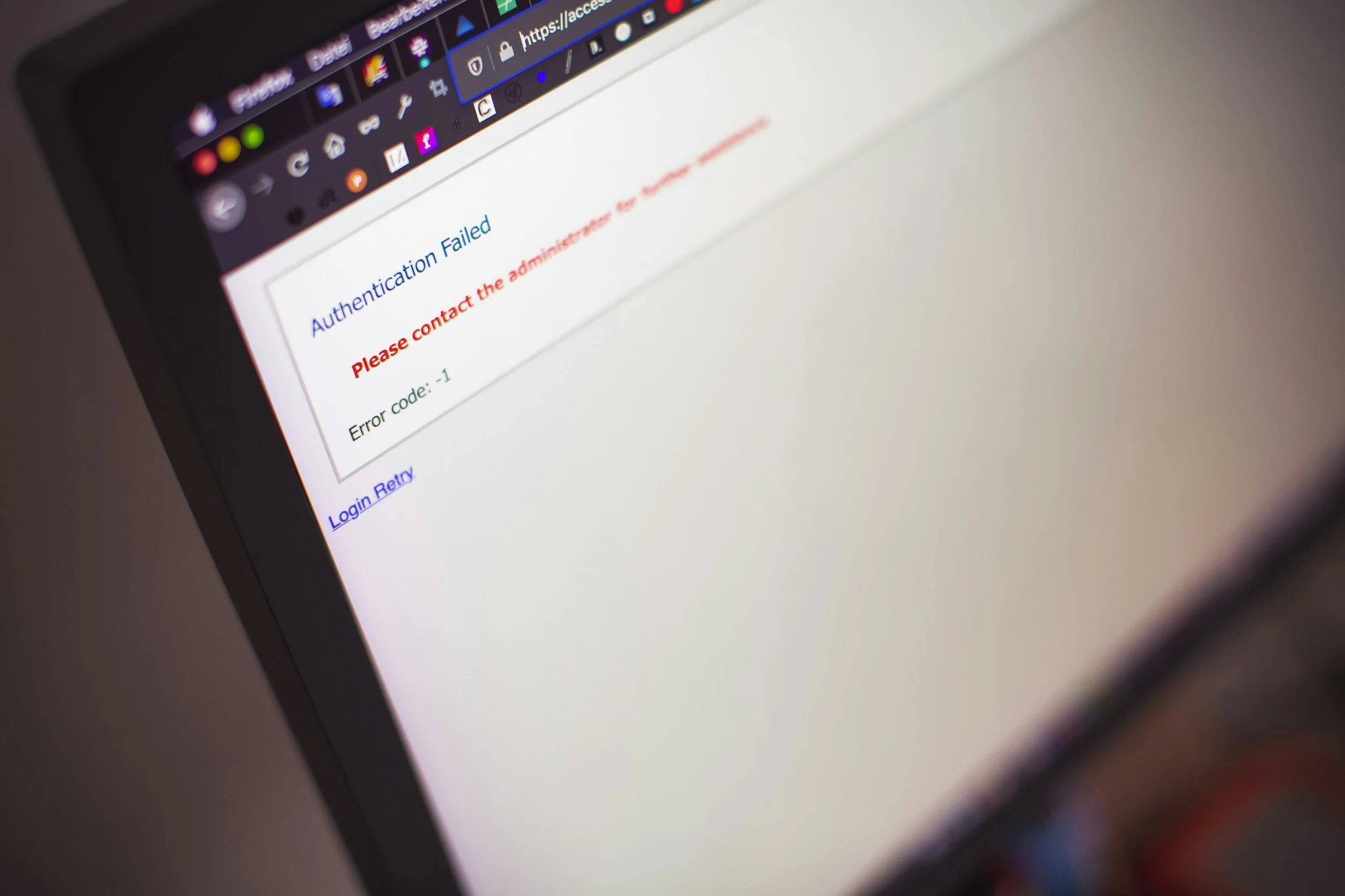

When an AI system operates as a "black box," an outcome the user did not expect or want (e.g., a loan denial, a triage alert, a product recommendation) feels like an arbitrary and frustrating command. The system violates the pillar of Integrity if it cannot provide a transparent reason for its decision.

1. The Necessity of Auditable Decisions

Recourse is the mechanism that ensures the system is held accountable to the user and to compliance frameworks.

Transforming Frustration into Transparency: If an AI-powered system denies a request, the system must provide a clear, traceable, and auditable reason in plain language. For example, a system denying an expense report should state, “Denied: Expense exceeds quarterly travel budget by 15% as per policy 7.4”. This clarity transforms a frustrating rejection into a transparent, understandable, and auditable decision.
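To make the expense example concrete, here is a minimal sketch of how a decision could carry its own plain-language reason and policy reference. The `DecisionRecord` class, the `review_expense` function, and the single budget rule are hypothetical names and assumptions used only for illustration; a real system would draw its rules and policy references from its own policy engine.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """Hypothetical audit record for one AI decision (illustrative only)."""
    outcome: str      # e.g. "Denied" or "Approved"
    reason: str       # plain-language explanation shown to the user
    policy_ref: str   # the rule the decision traces back to
    decided_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def user_message(self) -> str:
        # One sentence a non-expert can read and an auditor can trace.
        return f"{self.outcome}: {self.reason} as per policy {self.policy_ref}"


def review_expense(amount: float, budget: float) -> DecisionRecord:
    """Toy rule (an assumption): deny when the amount exceeds the budget."""
    if amount > budget:
        over_pct = round((amount - budget) / budget * 100)
        return DecisionRecord(
            outcome="Denied",
            reason=f"Expense exceeds quarterly travel budget by {over_pct}%",
            policy_ref="7.4",
        )
    return DecisionRecord(
        outcome="Approved",
        reason="Expense is within quarterly travel budget",
        policy_ref="7.4",
    )


record = review_expense(amount=1150.0, budget=1000.0)
print(record.user_message())
```

Because the reason, the policy reference, and a timestamp are stored together with the outcome, the same record can serve both the user-facing message and a later audit.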

Empowering User Agency: Auditable recourse is essential for Meaningful Human Control (MHC). When users are given a clear path to appeal or correct the AI’s mistake, they retain a sense of control and agency, which directly increases trust.

2. Building Trust Through Failure

Achieving Calibrated Trust requires mitigating both active distrust and dangerous over-trust. The moment of failure is the most critical juncture for this calibration.

Graceful Error Handling: When an error occurs, the system must handle it gracefully. It must humbly acknowledge the mistake (e.g., "I misunderstood that request"), provide clear feedback mechanisms for correction, and visibly demonstrate that the user’s input is being used to improve the system.

The Co-Learning Loop: This investment in user feedback is the necessary mechanism that maintains the pillar of Integrity and enables the calibration of the AI's Ability. It shows the user that the system is not static, but is continuously learning from their experience—a crucial component of building a long-term, reliable relationship.
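One way to picture this loop is a correction log that acknowledges the error, records the user's input, and lets the user see what happened to it. The sketch below is illustrative only: `FeedbackLog`, `Correction`, and the three status values are hypothetical, and the step that actually feeds corrections into model improvement is outside its scope.

```python
from dataclasses import dataclass, field
from typing import Dict

# Assumed lifecycle of a user correction (hypothetical status names).
STATUSES = ("received", "under_review", "used_for_improvement")


@dataclass
class Correction:
    decision_id: str
    user_comment: str
    status: str = "received"


@dataclass
class FeedbackLog:
    corrections: Dict[str, Correction] = field(default_factory=dict)

    def submit(self, decision_id: str, user_comment: str) -> str:
        """Record the correction and acknowledge the mistake to the user."""
        self.corrections[decision_id] = Correction(decision_id, user_comment)
        return "Sorry, I got that wrong. Your correction has been recorded."

    def advance(self, decision_id: str, new_status: str) -> None:
        """Move a correction to a later stage of the assumed lifecycle."""
        if new_status not in STATUSES:
            raise ValueError(f"Unknown status: {new_status}")
        self.corrections[decision_id].status = new_status

    def status_message(self, decision_id: str) -> str:
        """Show the user what has happened to their feedback."""
        status = self.corrections[decision_id].status.replace("_", " ")
        return f"Your correction on decision {decision_id} is: {status}"


log = FeedbackLog()
ack = log.submit("D-101", "The budget figure was out of date.")
log.advance("D-101", "used_for_improvement")
print(ack)
print(log.status_message("D-101"))
```

The visible status is the point of the design: the user can check that the correction did not disappear after it was submitted.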

II. The Design Strategy: Controls and Oversight

Designing for recourse requires a commitment to human-friendly controls and a visual language that communicates the system's ability to be corrected.

1. Providing Human-Friendly Controls

Users must feel they can easily intervene in autonomous processes, counteracting the sense of helplessness that complex automation often creates.

Simple Intervention: AI products must feature simple, accessible ways for users to give feedback, direct the AI, and easily step in to take over when necessary. This is crucial for retaining user agency, particularly in systems where autonomous agents may take unexpected or unwanted actions.

Transparency of Confidence: Recourse is often triggered when the user suspects the AI is wrong. To preemptively manage this, the design must use Explainable AI (XAI) patterns that communicate the AI’s confidence level using visual cues (bars/badges) or natural language, giving the user a transparent basis for deciding whether to intervene.
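As a rough illustration of the natural-language variant, a confidence score can be mapped to a short badge label that tells the user whether a closer look is warranted. The `confidence_badge` function, the 0.9 and 0.6 thresholds, and the label wording are assumptions chosen for the example; appropriate cut-offs depend on the model and the stakes involved.

```python
def confidence_badge(score: float) -> str:
    """Map a model confidence score in [0, 1] to a plain-language badge.

    The thresholds below are illustrative assumptions, not recommendations.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= 0.9:
        return "High confidence"
    if score >= 0.6:
        return "Medium confidence: please review"
    return "Low confidence: verify before relying on this result"


for score in (0.95, 0.72, 0.31):
    print(f"{score:.0%} -> {confidence_badge(score)}")
```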

2. The Hybrid Oversight Model

In high-stakes fields where high certainty and auditability are required (e.g., financial services), the most practical solution is the hybrid human-AI model.

Accountability Through Review: These models combine the analytical speed of AI with essential human oversight. The human expert remains indispensable for considering ethical factors, weighing real-world context, and making the final decision, ensuring that accountability is preserved.

Efficiency Driver: Case studies suggest that this oversight is not a regulatory drag but a driver of efficiency. The human analyst’s final sign-off, enabled by the AI’s transparent, pre-assessed data, converts a potential compliance failure into a reliable, high-speed decision, improving operational agility while reducing legal risk.
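The division of labor in the hybrid model can be sketched as follows: the AI prepares a recommendation with its rationale, and no decision becomes final until a named human signs off. The `Recommendation` class and `sign_off` function are hypothetical and deliberately simplified; they are not drawn from any specific product or from the case studies.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    """AI pre-assessment awaiting human review (illustrative only)."""
    case_id: str
    ai_suggestion: str                    # e.g. "approve" or "deny"
    rationale: str                        # transparent basis for the suggestion
    final_decision: Optional[str] = None  # set only by a human reviewer
    reviewer: Optional[str] = None

    @property
    def overridden(self) -> bool:
        # True when the human's final decision differs from the AI's suggestion.
        return (
            self.final_decision is not None
            and self.final_decision != self.ai_suggestion
        )


def sign_off(rec: Recommendation, reviewer: str, decision: str) -> Recommendation:
    """Record the human's final decision; the AI suggestion is advisory."""
    if not reviewer:
        raise ValueError("A named human reviewer is required")
    rec.final_decision = decision
    rec.reviewer = reviewer
    return rec


rec = Recommendation("C-42", "deny", "Income documents do not match application")
sign_off(rec, reviewer="analyst_a", decision="approve")
print(rec.final_decision, rec.reviewer, rec.overridden)
```

Keeping the AI suggestion and the human decision in separate fields preserves the audit trail: it stays visible who decided, and whether the reviewer agreed with the system.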

III. Conclusion: Recourse as a Strategic Asset

The Recourse Requirement is the strategic mechanism that institutionalizes accountability, transforming probabilistic outputs into auditable, trustworthy decisions.

For product leaders, the commitment to designing for accountable failure is a commitment to sustainable user loyalty. By providing clear controls and transparent explanations for every outcome, organizations can ensure their AI systems are not only fast but also fair, compliant, and, most importantly, trusted.

Sources

https://www.mmi.ifi.lmu.de/pubdb/publications/pub/chromik2021interact/chromik2021interact.pdf

https://medium.com/biased-algorithms/human-in-the-loop-systems-in-machine-learning-ca8b96a511ef

https://blog.workday.com/en-us/future-work-requires-seamless-human-ai-collaboration.html

https://cltc.berkeley.edu/publication/ux-design-considerations-for-human-ai-agent-interaction/

https://www.cognizant.com/us/en/insights-blog/ai-in-banking-finance-consumer-preferences

https://arxiv.org/html/2509.18132v1
